We’re demystifying skin cancer removal so you can take the fear out of your regular dermatologist appointments.

Today, about 9,500 people in the United States will be diagnosed with skin cancer. The same was true yesterday, and it will be true tomorrow. Statistically, one in every five Americans will develop skin cancer by age 70. That means skin cancer removal is a far more common procedure than you might think.

These aren’t small numbers, but we’ve got some great news: when skin cancer is diagnosed early (for example, at your annual dermatologist visit), it is highly treatable, and its survival rates are among the best of any cancer. Usually, the skin cancer is identified and removed entirely, sometimes right there in the office during your appointment.

Curious about what skin cancer removal is actually like? We spoke to a dermatologist, as well as two women who’ve been through it themselves. Grab your sunscreen and get ready for an informative ride!

How is Skin Cancer Identified in the First Place?

There are three primary ways skin cancer is identified. Let’s discuss all three.

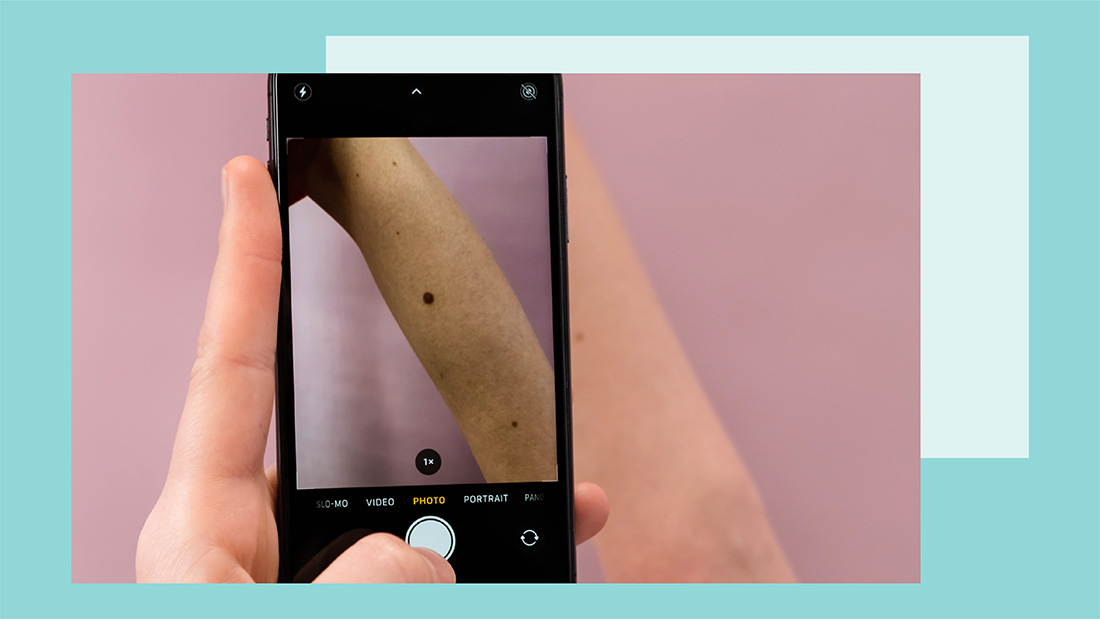

The first is your own naked eye, when you do self-checks at home. Knowing the ABCDEs of skin cancer can help. They stand for asymmetry (an irregular, lopsided shape), border (uneven or jagged edges), color (very dark or multiple colors), diameter (greater than 6mm), and evolving (any change in appearance). If you notice any of these signs, a dermatologist visit is imperative. You may not have cancer, but you’ll want a medical opinion.

The second way to identify skin cancer is through your dermatologist’s naked eye. They know exactly what to look for and can examine areas of your body that you can’t easily see yourself. In many cases, the potential skin cancers your doctor identifies are exactly that: potential.

This leads us to biopsies, which are the third way to identify skin cancer.

“Any suspicious lesion should be ‘biopsied,’ meaning that a representative sample of the lesion [is scraped or cut away] and sent to the lab for definitive diagnosis under the microscope,” explains board-certified dermatologist Dr. Kenneth Mark. “More often than not the full ‘clinical’ lesion is removed, but that does not mean that microscopically it is completely removed or cured.”

Once the biopsy results come back, you’ll know whether the lesion was benign (harmless) or if it was skin cancer. The biopsy will also confirm the type of skin cancer, which will inform the next steps.

The Biopsy and Skin Cancer Removal Process

The most common forms of skin cancer are basal cell and squamous cell carcinoma, followed by melanoma. Fortunately, nearly all skin cancers can be excised (cut away). Even melanoma, one of the most aggressive types, is not typically treated with chemotherapy when caught early. Only in advanced cases are more intensive treatments required.

“Simple basal and squamous cells can be treated with destruction methods or simple excision. However, the optimal cure rate for basal and squamous cell carcinoma is obtained via Mohs surgery, a technique in which 100% of the cancer margins are checked under the microscope while the patient is in the office,” explains Dr. Mark. “Melanomas are typically treated via excision with ‘wide and deep’ margins.”

We know: having your skin cut doesn’t sound very pleasant. That said, skin cancer removal is far better than the alternative (letting skin cancer grow), and it’s actually not as bad as it sounds.

Tess Day, a 39-year-old woman based in Seattle, has had many dermatology visits and biopsies since her father passed away from metastatic melanoma in 2007. Over the years, she’s also had a few severely abnormal moles removed and re-sectioned, and in 2017, she received a melanoma diagnosis.

“It was summer, and I had been wearing a tank top, and a spot on my upper back kept catching my eye in the mirror,” she tells The Klog. “I couldn’t really put a finger on why I kept noticing it, except perhaps it had changed. I went to my dermatologist to get it checked out. She looked at it and said it looked fine to her, but [she performed] a ‘punch biopsy’ just to double-check.”

To the surprise of both Tess and her doctor, the results came back as stage zero melanoma, meaning the cancer cells were still confined to the top layer of the skin.

“Because my cancer was only stage zero, they did not have to do a biopsy of my lymph nodes, but they did feel them prior to the surgery. They numbed the area on my back and spent about an hour carefully taking out a large portion of skin and then stitching it back up,” she recalls. “They sent the tissue out to make sure that it had clear margins. Fortunately, it did, and they confirmed all the cancer had been removed.”

The affected area was numbed with a local anesthetic so Tess couldn’t feel the cutting, and afterward it was carefully stitched up so it could heal. A biopsy or Mohs surgery can sometimes leave lingering tenderness or pain, but it’s usually not severe. Naturally sensitive areas, like your scalp or nose, are more likely to feel tender afterward.

You can liken the experience to having a cavity filled, which also involves numbing the area and removing the abnormal tissue.

The Importance of Practicing Caution

Melanoma is one of the deadliest forms of skin cancer, and Tess was able to catch it at stage zero because of her diligence. In addition to wearing sunscreen every day, annual dermatology visits are incredibly important to your health. Depending on your family history or complexion, your dermatologist may recommend visits every three or six months instead.

“As someone who has a lot of moles and freckles all over my body, my mom started taking me to the dermatologist at a young age. I went sporadically throughout my youth but now go every 6 months,” says Marissa McLean, a Chicago-based teacher in her late 20s. “While I used to be pretty apprehensive about appointments, I find going regularly puts me at ease. The appointments are pretty low key. The doctor will ask if I have noticed any changes in my skin and then do a full skin evaluation looking closely at any concerning moles or areas. If she suspects something looks a little off, she’ll take a small biopsy there in the office.”

While many of her biopsies have come back normal—allowing her to move ahead until her next appointment—some have required her to come back to the office to remove more skin.

“This process is a little more intense, but it is still a pretty easy office visit. They will then send that to the lab to make sure they have removed all of the abnormal cells,” she says. “While this felt pretty scary the first couple of times, I’m so much happier knowing they have removed everything abnormal before it turned into something more.”

Tess feels the same way about that sense of relief. She stresses the importance of being your own health advocate by doing self-checks, going to the dermatologist regularly, and pressing for a biopsy even if the doctor doesn’t think it’s necessary.

“I credit what happened to my dad as the reason I paid attention, asked for a biopsy, and caught [my melanoma] early,” she says. “Make sure you find a dermatologist who believes that a patient knows their own body better than [the doctor does], and wear your sunscreen!”

One Last Word

Our goal in writing this isn’t to freak you out. Rather, we want to spread awareness of how much regular sunscreen application and routine dermatology visits matter. We also want to emphasize the value of self-checks (remember the ABCDEs). Skin cancer is highly treatable, especially when it’s caught early!